Hive Create Table Stored As Parquet

For example, if your data pipeline produces Parquet files in the HDFS directory /user/etl/destination, you might create an external table as follows. The `STORED AS PARQUET` clause for the Parquet columnar storage format is available in Hive 0.13.0 and later.

`CREATE EXTERNAL TABLE external_parquet (c1 INT, c2 STRING, c3 TIMESTAMP) STORED AS PARQUET LOCATION '/user/etl/destination';`

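To create a managed Parquet table, use a statement such as `CREATE TABLE parquet_table_name (x INT, y STRING) STORED AS PARQUET;`. Data is converted into the Parquet file format implicitly when it is written into such a table with `INSERT ... SELECT`. `LOAD DATA`, by contrast, only copies or moves files and does not convert them. Last but not least, each student should …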

Alternatively, you can name the input and output format classes explicitly with `STORED AS INPUTFORMAT ... OUTPUTFORMAT ...`. Download or create a sample CSV file. The following examples show how to create managed tables. Similar syntax can be applied to create external tables if Parquet, ORC, or Avro files already exist in HDFS.
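
The "create sample csv" step and the text-to-Parquet conversion can be sketched as follows. This is a minimal illustration, assuming a two-column layout (`id`, `name`) and the hypothetical table names `sample_csv` and `sample_parquet`. The Python part writes a header-less CSV with Unix line endings. The HiveQL string stages that file as a TEXTFILE table and then copies it into a Parquet table with `INSERT ... SELECT`, which is the step where the rows are actually rewritten as Parquet.

```python
import csv
import os
import tempfile

# Hypothetical sample rows (id, name). No header line, because a plain
# TEXTFILE table would otherwise read the header as a data row.
ROWS = [(1, "alice"), (2, "bob"), (3, "carol")]

# HiveQL for staging and conversion. Table names and the path are
# placeholders. LOCAL means the path is on the machine running Hive
# (HiveServer2 when connected through Beeline).
HIVEQL = """\
CREATE TABLE sample_csv (id INT, name STRING)
ROW FORMAT DELIMITED FIELDS TERMINATED BY ','
STORED AS TEXTFILE;

LOAD DATA LOCAL INPATH '/tmp/sample.csv' INTO TABLE sample_csv;

CREATE TABLE sample_parquet (id INT, name STRING) STORED AS PARQUET;

-- The rows are rewritten as Parquet here, not during LOAD DATA.
INSERT OVERWRITE TABLE sample_parquet SELECT id, name FROM sample_csv;
"""


def write_sample_csv(path):
    """Write ROWS to `path` as comma-separated text with '\\n' line endings."""
    with open(path, "w", newline="") as f:
        csv.writer(f, lineterminator="\n").writerows(ROWS)
    return path


if __name__ == "__main__":
    out = write_sample_csv(os.path.join(tempfile.gettempdir(), "sample.csv"))
    print("wrote", out)
    print(HIVEQL)
```

After copying the file to the Hive host, run the printed statements in Beeline or Hue.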

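We believe this approach (record shredding and assembly, as described in the Dremel paper) is superior to simple flattening of nested name spaces. Next, log into Hive through Beeline or Hue, create the tables, and load some data. Parquet is built to support very efficient compression and encoding schemes.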

Unfortunately, Hive cannot create an external table on a single file, only on a directory. In Databricks Runtime 8.0 and above, a `CREATE TABLE` statement that has neither a `STORED AS` nor a `ROW FORMAT` clause is parsed with the `CREATE TABLE USING` syntax, and a Delta table is created by default. A MapReduce job will …

We can use a regular `INSERT` query to load data into a Parquet table. For more information, see "Creating a Table from Query Results (CTAS)", "Examples of CTAS Queries", and "Using CTAS and INSERT INTO for ETL and Data Analysis". A related Spark question follows these steps:

- Display the table with `sqlContext.sql("select * from 20181121_SPARKHIVE_431591").show()`.
- Store the same query result (`sel`) on HDFS as Parquet, partitioned by `productID`, using `sel.write.parquet(..., partitionBy="productID")` into a `parquet_nw` test directory.
- Reuse that location as the Parquet table location through a `CREATE` statement passed to `sqlContext.sql(...)`.

In this example, we're creating a TEXTFILE table and a PARQUET table.

To convert data into Parquet format, you can use CREATE TABLE AS SELECT (CTAS) queries. Parquet is built from the ground up with complex nested data structures in mind. It uses the record shredding and assembly algorithm described in the Dremel paper. This page shows how to create Hive tables with Parquet, ORC, and Avro as the storage file format using Hive SQL (HQL).

Use `ROW FORMAT SERDE` to name a SerDe class explicitly. See the Databricks Runtime 8.0 migration guide for details.

Create Table with Parquet, ORC, Avro - Hive SQL. If the table is created in Big SQL and then populated in Hive, the `TBLPROPERTIES` compression setting used for `inv_hive_parquet` can also enable Snappy compression. In Databricks Runtime 8.0 and above, you must specify either the `STORED AS` or the `ROW FORMAT` clause.

For an external partitioned table, we need to update the partition metadata. Hive is not aware of the partitions until they are explicitly registered. `STORED AS PARQUET` selects the Parquet columnar storage format in Hive 0.13.0 and later. In Spark SQL, options can specify the storage format (serde, input format, output format), for example `fileFormat 'parquet'`.
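
When partition directories follow Hive's `key=value` naming, `MSCK REPAIR TABLE table_name;` can register all of them in one step. If you prefer explicit statements, for example to register only some directories, a sketch like the following generates one `ALTER TABLE ... ADD IF NOT EXISTS PARTITION` statement per directory. The table name, base path, and directory list are hypothetical. Every partition value is emitted as a quoted literal.

```python
def add_partition_statements(table, base_location, partition_dirs):
    """Build one ALTER TABLE ... ADD PARTITION statement per directory.

    partition_dirs holds Hive-style directory names such as 'year=2020'
    that sit directly under base_location (hypothetical layout).
    """
    base = base_location.rstrip("/")
    statements = []
    for name in partition_dirs:
        key, sep, value = name.partition("=")
        if not sep or not key or not value:
            raise ValueError(f"not a key=value partition directory: {name!r}")
        statements.append(
            f"ALTER TABLE {table} ADD IF NOT EXISTS "
            f"PARTITION ({key}='{value}') LOCATION '{base}/{name}';"
        )
    return statements


if __name__ == "__main__":
    for stmt in add_partition_statements(
        "inv_hive_parquet", "/user/etl/inv/", ["year=2020", "year=2021"]
    ):
        print(stmt)
```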

Put test.csv into the Hadoop directory `testing`. If `/users/file.parquet` is the only file in the directory, you can set the location to `/users` and Hive will pick up your file. Next, we need a cross-reference table that relates students to classes.
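
Because Hive reads every visible file under a table's `LOCATION`, a stray CSV next to the Parquet file would also be read as Parquet, which typically causes read errors. Before pointing `LOCATION` at a directory, you can run a quick check like the sketch below. It works on a local copy of the directory (checking HDFS directly would need an HDFS client, which is outside this sketch). It relies only on the fact that Parquet files begin and end with the 4-byte magic `PAR1`. It does not validate the footer. The helper names are hypothetical.

```python
import os
import tempfile

PARQUET_MAGIC = b"PAR1"  # every Parquet file starts and ends with these bytes


def is_parquet_file(path):
    """Cheap signature check: 'PAR1' header and trailer. Footer is not validated."""
    if os.path.getsize(path) < 12:  # magic + 4-byte footer length + magic
        return False
    with open(path, "rb") as f:
        head = f.read(4)
        f.seek(-4, os.SEEK_END)
        tail = f.read(4)
    return head == PARQUET_MAGIC and tail == PARQUET_MAGIC


def non_parquet_files(directory):
    """Return visible files in `directory` that lack the Parquet signature.

    Names starting with '_' or '.' (such as _SUCCESS) are skipped because
    Hadoop input formats treat them as hidden. Subdirectories are skipped too.
    """
    suspects = []
    for name in sorted(os.listdir(directory)):
        full = os.path.join(directory, name)
        if name.startswith(("_", ".")) or not os.path.isfile(full):
            continue
        if not is_parquet_file(full):
            suspects.append(name)
    return suspects


if __name__ == "__main__":
    demo = tempfile.mkdtemp()
    with open(os.path.join(demo, "file.parquet"), "wb") as f:
        f.write(PARQUET_MAGIC + b"\x00" * 8 + PARQUET_MAGIC)  # signature only
    with open(os.path.join(demo, "test.csv"), "w") as f:
        f.write("1,alice\n")
    print(non_parquet_files(demo))  # ['test.csv']
```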

The solution is to create a table dynamically from the Avro data and then create a new Parquet table from the Avro one. In Spark SQL, `CREATE TABLE src(id int) USING hive OPTIONS(fileFormat 'parquet')` creates a Hive table stored as Parquet. More on this can be found in the Hive DDL Language Manual.
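
One way to express the Avro-then-Parquet approach in Hive itself is shown in the sketch below. It assumes Hive 0.14 or later (for `STORED AS AVRO`), an Avro schema file reachable through the `avro.schema.url` table property, and hypothetical table names and paths. No column list is given for the Avro table because Hive derives the columns from the Avro schema. The CTAS statement then rewrites the rows as Parquet.

```python
def avro_to_parquet_hiveql(avro_table, parquet_table, avro_dir, schema_url):
    """Return two HiveQL statements: expose Avro files as a table, then CTAS to Parquet.

    All arguments are placeholders for your own names and paths.
    """
    return [
        f"CREATE EXTERNAL TABLE {avro_table}\n"
        "STORED AS AVRO\n"
        f"LOCATION '{avro_dir}'\n"
        f"TBLPROPERTIES ('avro.schema.url'='{schema_url}');",
        f"CREATE TABLE {parquet_table} STORED AS PARQUET AS\n"
        f"SELECT * FROM {avro_table};",
    ]


if __name__ == "__main__":
    for stmt in avro_to_parquet_hiveql(
        "events_avro", "events_parquet", "/data/events", "hdfs:///schemas/events.avsc"
    ):
        print(stmt, end="\n\n")
```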

By default, when no storage format is specified, Spark SQL reads Hive table files as plain text. 2) Load data into the Hive table. A related problem is a Hive table over Parquet data that shows 0 records.

Parquet is a columnar store, which gives us advantages for storing and scanning data. Create four tables in Hive. To copy an existing table into Parquet: `CREATE TABLE IF NOT EXISTS hql.transactions_copy STORED AS PARQUET AS SELECT * FROM hql.transactions;`

`CREATE TABLE employee_parquet (name STRING, salary INT, deptno INT, DOJ DATE) STORED AS PARQUET;` A `ROW FORMAT DELIMITED FIELDS TERMINATED BY` clause is unnecessary here because Parquet is not a delimited text format. Before Hive 0.8.0, `CREATE TABLE LIKE view_name` would make a copy of the view.

Storing the data column-wise allows better compression, which gives faster scans while using less storage. Create Hive tables with the Avro, ORC, and Parquet file formats.
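
To make the compression argument concrete, here is a toy sketch of two encodings that a column-wise layout enables: dictionary encoding and run-length encoding of a single column. It is only an illustration. It is not Parquet's on-disk encoding, which stores dictionary indices with a run-length/bit-packing hybrid. In a row-oriented layout the same values would be interleaved with other columns, so these runs would not form.

```python
from itertools import groupby


def dictionary_encode(column):
    """Map each distinct value to a small integer id, in first-seen order."""
    dictionary = {}
    ids = []
    for value in column:
        ids.append(dictionary.setdefault(value, len(dictionary)))
    return list(dictionary), ids


def run_length_encode(ids):
    """Collapse consecutive repeated ids into (id, run_length) pairs."""
    return [(key, len(list(group))) for key, group in groupby(ids)]


def decode(values, runs):
    """Invert both encodings to recover the original column."""
    return [values[key] for key, length in runs for _ in range(length)]


if __name__ == "__main__":
    # One column of a hypothetical table, stored contiguously.
    country = ["US"] * 4 + ["DE"] * 3 + ["US"] * 2
    values, ids = dictionary_encode(country)
    runs = run_length_encode(ids)
    print(values)  # ['US', 'DE']
    print(runs)    # [(0, 4), (1, 3), (0, 2)]
    assert decode(values, runs) == country
```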

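In Hive 0.8.0 and later releases, `CREATE TABLE LIKE view_name` creates a table by adopting the schema of view_name (fields and partition columns), using defaults for SerDe and file formats. In Hive 0.10, 0.11, or 0.12, `STORED AS PARQUET` is not available. Parquet tables there are declared with `ROW FORMAT SERDE` plus explicit `INPUTFORMAT` and `OUTPUTFORMAT` classes from the separate Parquet Hive package. The classes table: `CREATE TABLE IF NOT EXISTS university.classes (classid INT, studyname STRING, classname STRING) STORED AS PARQUET;`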
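
The version split between Hive 0.10-0.12 and 0.13+ can be captured in the small, hypothetical helper below, which picks the storage clause for a given Hive version string. The legacy SerDe and input/output format class names are the ones listed for the separate Parquet Hive package on the Hive wiki's Parquet page. Treat them as an assumption to check against the jars you actually deploy.

```python
# Legacy clause for Hive 0.10-0.12 with the separate Parquet Hive bundle
# (class names as listed on the Hive wiki; verify against your jars).
LEGACY_PARQUET_CLAUSE = (
    "ROW FORMAT SERDE 'parquet.hive.serde.ParquetHiveSerDe'\n"
    "STORED AS\n"
    "  INPUTFORMAT 'parquet.hive.DeprecatedParquetInputFormat'\n"
    "  OUTPUTFORMAT 'parquet.hive.DeprecatedParquetOutputFormat'"
)


def parquet_storage_clause(hive_version):
    """Return the Parquet storage clause for a version string like '0.12.0'."""
    major, minor = (int(part) for part in hive_version.split(".")[:2])
    if (major, minor) >= (0, 13):
        return "STORED AS PARQUET"  # native support from Hive 0.13.0
    if (major, minor) >= (0, 10):
        return LEGACY_PARQUET_CLAUSE
    raise ValueError(f"Hive {hive_version} predates Parquet support")


if __name__ == "__main__":
    for version in ("0.12.0", "3.1.2"):
        print(f"-- Hive {version}")
        print("CREATE TABLE parquet_table_name (x INT, y STRING)")
        print(parquet_storage_clause(version) + ";")
```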

The enrollment cross-reference table: `CREATE TABLE IF NOT EXISTS university.enrollment (classid INT, studentid INT) STORED AS PARQUET;` A partitioned, compressed table in Hive: `CREATE TABLE inv_hive_parquet (trans_id INT, product VARCHAR(50), trans_dt DATE) PARTITIONED BY (year INT) STORED AS PARQUET TBLPROPERTIES ('PARQUET.COMPRESS'='SNAPPY');` Using Parquet tables in Hive: to create a table named PARQUET_TABLE that uses the Parquet format, use a command like `CREATE TABLE parquet_table_name (x INT, y STRING) STORED AS PARQUET;`, substituting your own table name, column names, and data types.
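
Statements like `inv_hive_parquet` combine three optional pieces: columns, partition columns, and table properties. The hypothetical helper below assembles them in the order Hive expects. Partition columns must not also appear in the regular column list. The `'PARQUET.COMPRESS'='SNAPPY'` property above comes from a Big SQL context. In Hive itself, the table property commonly used to pick the Parquet codec is `parquet.compression`, as in the usage example, but check which key your engine and version honor.

```python
def parquet_table_ddl(table, columns, partition_columns=(), properties=None):
    """Build CREATE TABLE ... STORED AS PARQUET with optional partitions/properties.

    columns and partition_columns are (name, hive_type) pairs; a partition
    column must not also appear in columns. properties maps table-property
    keys to values. All names are placeholders.
    """
    def col_list(pairs):
        return ", ".join(f"{name} {hive_type}" for name, hive_type in pairs)

    parts = [f"CREATE TABLE {table} ({col_list(columns)})"]
    if partition_columns:
        parts.append(f"PARTITIONED BY ({col_list(partition_columns)})")
    parts.append("STORED AS PARQUET")
    if properties:
        props = ", ".join(f"'{k}'='{v}'" for k, v in properties.items())
        parts.append(f"TBLPROPERTIES ({props})")
    return "\n".join(parts) + ";"


if __name__ == "__main__":
    print(parquet_table_ddl(
        "inv_hive_parquet",
        [("trans_id", "INT"), ("product", "VARCHAR(50)"), ("trans_dt", "DATE")],
        partition_columns=[("year", "INT")],
        properties={"parquet.compression": "SNAPPY"},
    ))
```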

Insert Into Hive Table Is Taking Lot Of Time From Spark Sql Performance Issue Stack Overflow

Hive Imports Csv Into The Table And Stores It In Parquet Format Programmer Sought

Hive Orc Returns Nulls Stack Overflow

Loading Parquet File Into A Hive Table Stored As Parquet Fail Values Are Null Stack Overflow

Solve The Problem Of Parquet Format Table Generated By Spark Saveastable Programmer Sought

How To Load A Parquet File Into A Hive Table Using Spark Stack Overflow

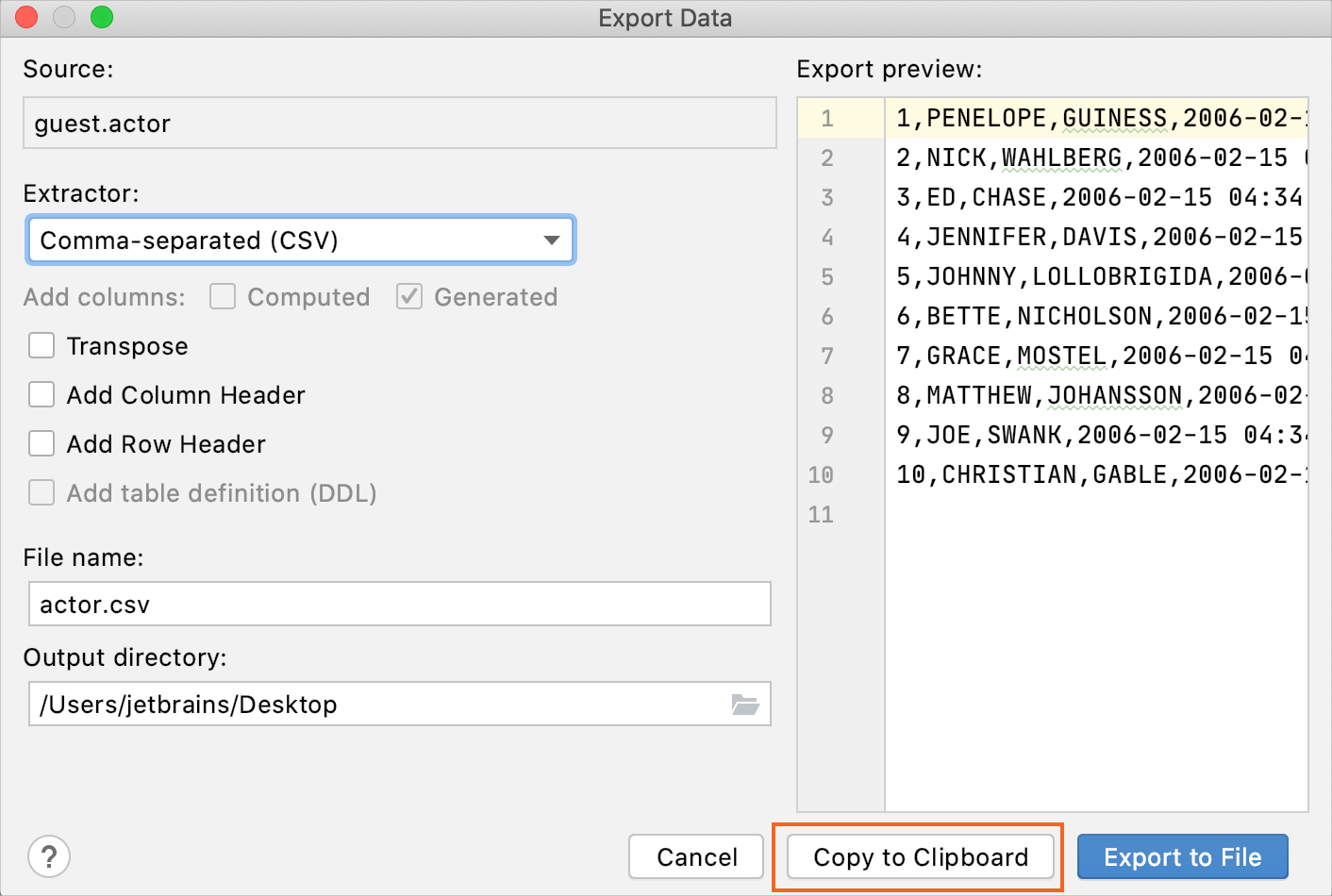

Export Data In Datagrip Datagrip

Smart Data Access With Hadoop Hive Impala Sap Blogs

0607 6 1 0 How To Convert A Hive Table In Orc Format That Uses Date Type To Parquet Table Programmer Sought

Detailed Hive Table Statement Create Table Programmer Sought

Hadoop Lessons How To Create Hive Table For Parquet Data Format File

0607 6 1 0 How To Convert A Hive Table In Orc Format That Uses Date Type To Parquet Table Programmer Sought

How To Load A Parquet File Into A Hive Table Using Spark Stack Overflow

Hive Create Table Command Hives Command Create

Query Parquet Format Table Exceptions Programmer Sought

Insert Into Hive Table Is Taking Lot Of Time From Spark Sql Performance Issue Stack Overflow

Create Table With Parquet Orc Avro Hive Sql Kontext

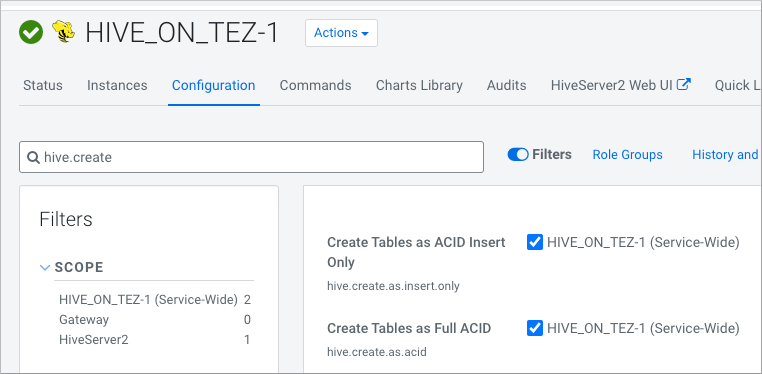

Configuring Legacy Create Table Behavior Cdp Public Cloud

0607 6 1 0 How To Convert A Hive Table In Orc Format That Uses Date Type To Parquet Table Programmer Sought

Posting Komentar untuk "Hive Create Table Stored As Parquet"